Stop Asking ChatGPT for Business Ideas: The Case for Solving Internal Pain

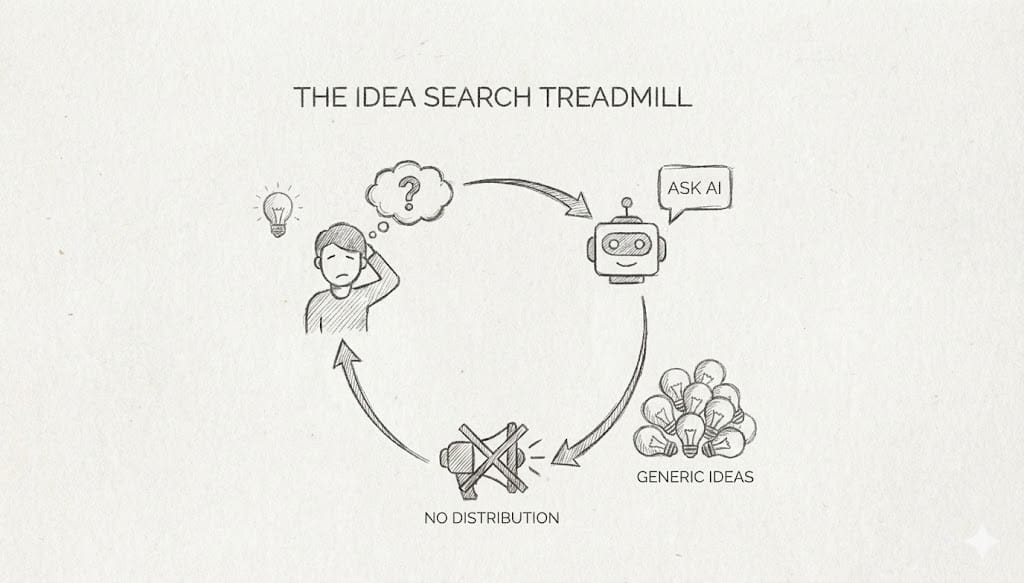

Looking for business ideas is one of the most energizing and exhausting processes known to mankind. I feel like I’ve been searching for "what’s next" for ages, probably consuming the equivalent of Taylor Swift’s carbon footprint just by asking ChatGPT to generate terrible startup ideas ... only to tell it it's clearly an idiot when it doesn't agree with me.

I get a little upset when I see internet gurus gaining huge followings by throwing out generic business ideas, or stuff that sounds plausibly good but that nobody could call good or bad without doing a metric ton of work. It's idea porn. The problem isn't that the ideas are bad; it’s that an idea without you in it is impossible to validate. Who would you sell it to? How would you reach them?

Since I got blacklisted on X and LinkedIn (my reach is atrocious now), I swear I could literally have the cure for cancer in my garage, and it would still take me six months to get anyone to listen to me. So, swimming in a "Red Ocean" with zero distribution advantage, pulling a generic idea off TikTok from a guy who has never actually started a software company? That’s a suicide mission. And I've been trying that over and over and over again for years.

The "Distribution Advantage" (It’s Closer Than You Think)

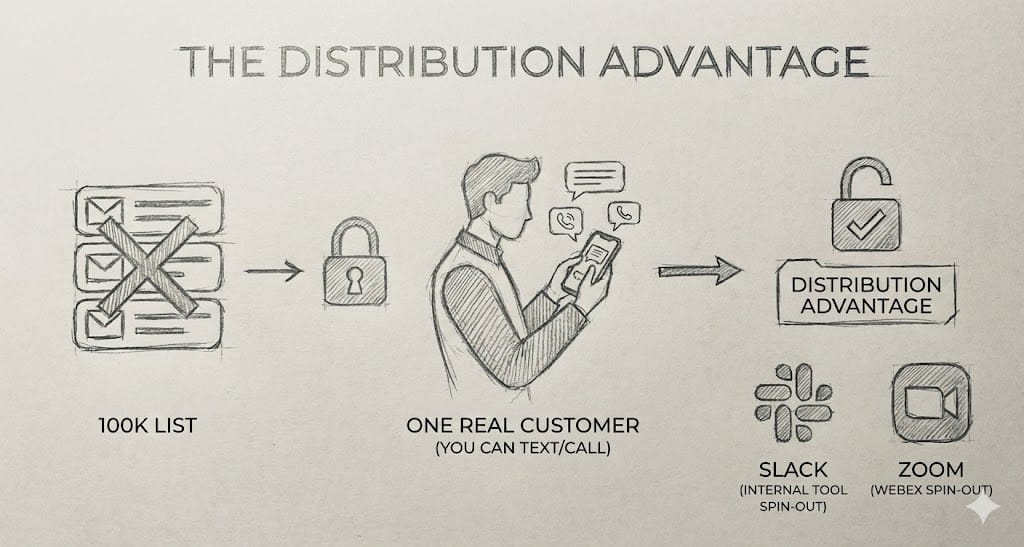

Recently, I stopped looking for "The Big Idea" and started looking for "an idea with a customer".

Usually, people think distribution means an email list of 100k people. But often, a distribution advantage just means one real customer you can text, call, or Slack (preferably someone you can just see in person or text). It means finding a problem where you are right now, with the people you already have access to.

There's good company in the "internal tool spin-out" camp:

- Slack: Started as an internal tool for a game studio (Tiny Speck). The game failed; the chat tool took over the world.

- Zoom: Eric Yuan was at WebEx. He saw the mobile/video shortcomings, couldn't get internal buy-in, and left to build the solution he knew his former customers needed.

Finding the Pain at Motion

I’m currently building new product at Motion. The company is full of A-players, literally the best team I’ve ever worked with. But even A-players run into problems that don't have good solutions. And not all problems SHOULD be solved by that team!

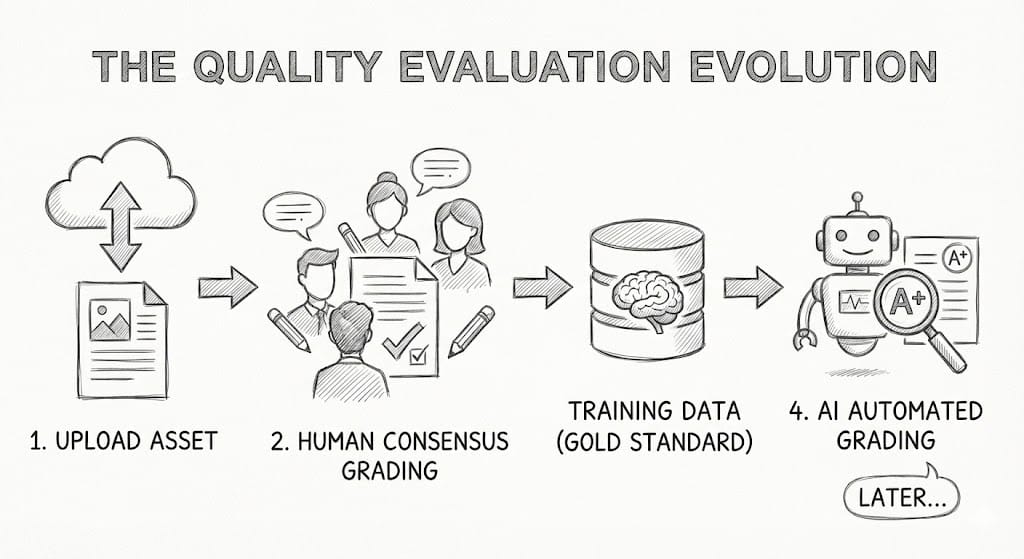

Two weeks ago, I caught an action item at the end of a meeting that sounded miserable (for me and the others on the team). The plan was for me to take screenshots of the LLM output locally each day, post them into Slack, and then pass a doc around for 3-5 people to grade the output. The idea was that over time we'd know if we were getting better or worse.

It sparked an idea for a tool that my team is now using nearly every day.

The Problem: Grading AI Output

We are working on a new project involving AI-generated text and images. The problem is how the hell to score it! Is it "good," "good enough," or "bad" ... and for which set of inputs? It's impossible to say! Of course, sometimes it's obvious, but once the output becomes "good enough," it gets far less obvious whether it's improving.

I come from a Computer Vision background. Computer Vision is comforting because it’s generally deterministic. If I show a model a picture of a puppy, it draws a box around the puppy. If I show it the same puppy image, I get the same box.

LLMs are different. They are non-deterministic. You can of course get close to determinism (set temperature to 0, for instance), but that tends to lobotomize the model. In practice, you put in the same prompt and you get a different result.

This makes testing a nightmare, and it makes automated qualitative testing very difficult.
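To make the temperature point concrete, here is a toy sketch of sampling, not anything pulled from a real model or from the Motion codebase. The three "token" scores in `TOY_LOGITS` and the `sample_token` helper are made up for illustration; the only claim is the general one that temperature 0 collapses to always picking the top score, while a higher temperature spreads the picks around.

```python
import math
import random

def sample_token(logits, temperature, rng):
    """Pick an index from `logits` after temperature scaling (toy example)."""
    if temperature == 0:
        # Greedy decoding: always take the highest-scoring candidate.
        return max(range(len(logits)), key=lambda i: logits[i])
    scaled = [score / temperature for score in logits]
    top = max(scaled)
    # Subtract the max before exponentiating for numerical stability.
    weights = [math.exp(s - top) for s in scaled]
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]

# Hypothetical scores for three candidate tokens.
TOY_LOGITS = [2.0, 1.5, 0.5]

rng = random.Random(0)
greedy = {sample_token(TOY_LOGITS, 0, rng) for _ in range(200)}
sampled = {sample_token(TOY_LOGITS, 1.0, rng) for _ in range(200)}

print("temperature 0 picks:", sorted(greedy))
print("temperature 1 picks:", sorted(sampled))
```

Same input, same scores, and the second run still lands on different tokens from call to call. Stack that over a few hundred tokens and you get a different output every time.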

I was manually generating outputs and sending them via Slack to a PM, who was putting grades in a spreadsheet. I was changing prompts left and right, but because the evaluation was so manual and subjective, I had no way of knowing if I was actually making the product better, worse, or just different.
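The missing piece in that spreadsheet workflow is tying each grade to the prompt version that produced the output. A minimal sketch of what that could look like, assuming a 1-5 grading scale and made-up reviewers and scores (`GRADES` and `summarize` are hypothetical names, not the actual tool):

```python
from collections import defaultdict
from statistics import mean

# Made-up grades for illustration: (prompt_version, reviewer, score from 1 to 5).
GRADES = [
    ("v1", "pm", 3), ("v1", "designer", 2), ("v1", "writer", 3),
    ("v2", "pm", 4), ("v2", "designer", 4), ("v2", "writer", 3),
]

def summarize(grades):
    """Return {prompt_version: (mean score, number of grades)}."""
    by_version = defaultdict(list)
    for version, _reviewer, score in grades:
        by_version[version].append(score)
    return {v: (round(mean(scores), 2), len(scores))
            for v, scores in by_version.items()}

summary = summarize(GRADES)
for version, (avg, count) in sorted(summary.items()):
    print(f"{version}: mean={avg} over {count} grades")
```

With only a handful of grades per version the averages are noisy, so this tells you direction at best. It is still more than a gut feeling.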

The "Quality Layer" Belongs to Product

This reminded me of a struggle I had last year trying to get AI to summarize police body-cam footage. Summarizing a chaotic video is incredibly easy for a human but insanely hard for an LLM. "Good" is subjective. A summary useful for a police sergeant is different from a summary useful for a defense attorney.

So, I looked at my team at Motion. We have experts on text, experts on composition, and experts on product.

- The Developers (me) shouldn't be deciding if the AI output is "good."

- The Product Team should be deciding that.

So, one morning at an hour that technically qualifies as 'WTF,' unable to sleep, I raided my own GitHub scrap yard. I grabbed a failed Supabase project (a relic from a previous idea that died because I had zero distribution), stripped it for parts, and built this idea out in a few hours. The goal was simple: let non-technical stakeholders provide qualitative feedback on AI outputs. It's like Tinder for AI outputs (lol).
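For a sense of how little data a swipe-style review needs, here is a rough sketch. It assumes a plain like/dislike vote per reviewer per output; the `Vote` shape, the thresholds, and the sample votes are all invented for illustration and are not the real schema.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Vote:
    output_id: str  # which AI output was shown
    reviewer: str   # who swiped
    liked: bool     # True = swipe right, False = swipe left

def approval_rates(votes):
    """Return {output_id: fraction of votes that were likes}."""
    likes, totals = {}, {}
    for vote in votes:
        totals[vote.output_id] = totals.get(vote.output_id, 0) + 1
        likes[vote.output_id] = likes.get(vote.output_id, 0) + int(vote.liked)
    return {oid: likes[oid] / totals[oid] for oid in totals}

def contested(votes, low=0.25, high=0.75):
    """Outputs where reviewers split; these are the ones worth talking about."""
    rates = approval_rates(votes)
    return sorted(oid for oid, rate in rates.items() if low < rate < high)

# Hypothetical votes from two reviewers on two outputs.
VOTES = [
    Vote("out-1", "pm", True), Vote("out-1", "designer", True),
    Vote("out-2", "pm", True), Vote("out-2", "designer", False),
]

rates = approval_rates(VOTES)
split = contested(VOTES)
```

The split outputs are arguably the most useful part: when the PM and the designer disagree, that is where "good" still needs defining.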

Lately I’ve been thinking about how every AI team struggles with the same problem:

we ship new prompts constantly, but we have no simple way to measure if the product is actually getting better.

Evals are too technical for non tech stakeholders.

So I’m hacking on a small side…

Andrew Pierno (@AndrewPierno), December 8, 2025

It’s an evaluation platform. Now, instead of spreadsheets and gut feelings, we have a pipeline and some data! But more importantly, I have a validated use case with one real "customer" (my coworkers) for a little side project, and I didn't have to cold-email a single soul to find them.

Stop Searching, Start Listening

Of course, who knows if anyone outside of Motion has a use case for it. I bet they do, but the point is that I built a product that one whole team at a high-growth startup is using to solve a real, hard problem, and that is rad!

If you are looking for what to build, stop asking ChatGPT. Look at your "Action Items" from your last meeting. Look at what your coworkers are complaining about. Look for something that sucks at work.

There is likely a tool you can build right now that solves a headache for the person sitting next to you. If they love it, chances are someone at another company will too. And if they don't? Well, at least you didn't spend six months trying to build a cure for cancer in your garage.

Thanks for reading ... I'm off to go find some MRR.